Frontend System Design of Google Docs (High-Level Design)

A Comprehensive Look at Designing an Interactive, Secure, and Scalable Document Editing Experience

1. Introduction

1.1 Overview of Google Docs

Google Docs is a web-based word processing application that allows multiple users to create, edit, and collaborate on documents in real time. Unlike traditional desktop word processors, Google Docs operates entirely in a web browser and stores documents in the cloud. This means that any changes made by one user can instantly appear for other users, enabling seamless collaboration without the need for constant saving and reloading.

When we type text in Google Docs, we see our changes immediately on the screen. If someone else is editing the same document, we can watch their edits appear almost instantly. This happens because the application uses real-time synchronization to update everyone’s view of the document. Besides just typing text, Google Docs also supports formatting (like bold and italics), inserting images, creating tables, commenting, and many other advanced features.

The beauty of this approach is that we never lose our work—Google Docs continuously saves all our changes. This makes it easy for multiple people to work together on a single document without worrying about merging different versions or emailing documents back and forth. The web-based nature of Google Docs also allows it to run on any device with a modern web browser, including laptops, tablets, and smartphones.

1.2 Why is Google Docs a Challenging Frontend System?

Building an application like Google Docs presents unique challenges, especially on the frontend. Traditional word processors can store and manipulate documents locally, where changes are handled by the software running on a user’s computer. However, Google Docs has to handle these operations in a web environment and deal with multiple people editing the same document at the same time.

Here are some reasons why it’s challenging:

Real-Time Collaboration: The system must constantly update the document view for all users. If three people are typing in three different parts of the document, everyone should see those updates instantly. This requires a sophisticated mechanism to synchronize changes without losing any user’s edits.

Complex Document Structures: Besides plain text, Google Docs supports tables, images, headers, footers, and many other formatting options. Handling all these features in a browser-based environment, while keeping the application responsive, is complex. Each feature (like bold formatting or inserting a table) needs to be carefully managed so it works in real time for all collaborators.

Version Control and Conflict Resolution: If two people type in the same spot at the same time, the system must decide whose changes go first or how to merge them. This is not straightforward and often involves algorithms like Operational Transformation (OT) or Conflict-free Replicated Data Types (CRDTs).

Performance at Scale: Google Docs needs to handle huge documents with many pages and still remain fast. When we scroll through a large document, the application must display content quickly without freezing or causing long delays. Achieving this smooth performance takes careful optimization and sometimes specialized data structures.

Network Issues and Offline Support: Users might lose their internet connection or switch to a weaker network. The application must handle this gracefully, allowing them to continue editing offline and then synchronize changes once their connection is restored.

All these factors make the Google Docs frontend complex. It needs to be more than just a simple text editor. It has to function as a real-time collaboration tool that runs smoothly for millions of people around the world.

1.3 Key Objectives of This Design

When designing a frontend system for a Google Docs–like application, we focus on a few main goals:

Real-Time Collaboration: Ensuring that changes made by any user are quickly reflected for all other users is essential. This means we need a stable and efficient way to communicate document updates between browsers and the server.

Ease of Use and Intuitive Interface: The system should be easy for anyone to pick up and use. Text formatting, inserting images, and adding comments should all be straightforward tasks. Even advanced features like suggestion mode or track changes should be accessible without requiring deep technical knowledge.

Robustness and Reliability: Users expect their work to be saved automatically and never lost. The design should handle unexpected problems like a user’s browser crashing or a temporary network loss without losing edits. A robust system might store changes locally first, then sync them once the connection is stable.

High Performance and Scalability: The application should remain responsive even for large documents and many concurrent users. A slow, laggy editor can disrupt collaboration and frustrate users. Techniques like lazy loading or virtual scrolling can help maintain speed when documents become lengthy.

Security and Access Control: Confidential information may be stored in these documents, so the system must protect data at all times. This includes validating user permissions, preventing unauthorized access, and ensuring that malicious scripts cannot be injected into documents.

Extensibility and Feature-Rich Environment: Users often demand additional features, such as chat within the document, version history, and commenting. The design should be flexible enough to incorporate these features without major rewrites or architectural changes. This makes it easier to evolve the application over time.

By keeping these objectives in mind, we ensure that our system can offer a seamless user experience, support real-time collaboration, and remain secure and reliable. In the following sections, we will explore how to achieve these goals by diving deeper into the technical details of designing a frontend system that can power an application like Google Docs.

2. System Overview & Requirements

Designing the frontend of a system like Google Docs requires a clear understanding of what the application must do (functional requirements) and how well it must perform under various conditions (non-functional requirements).

2.1 Functional and Non-Functional Requirements

Functional Requirements are the core actions and behaviors the system must be able to perform. For an online text editor like Google Docs, these might include creating new documents, editing text (including rich formatting like bold or italics), inserting images or tables, sharing documents with others, and allowing multiple people to edit at the same time. A good way to think about functional requirements is to consider any activity a user must be able to complete, such as typing text, undoing and redoing changes, or seeing other people’s cursor positions in real time.

Beyond text editing, we also want the ability to store and retrieve documents. For instance, users should be able to open existing documents from the server or their local cache, make changes, and save these changes so they are not lost. Another key function is the commenting feature, where users can leave feedback in the document margins, and track changes, which enables suggestion mode. We might also include export functionalities, like exporting to PDF or Word format.

Non-Functional Requirements focus on qualities like performance, security, and usability. For performance, the system should remain responsive even when documents grow large or when many users are editing simultaneously. Security requirements involve ensuring only authorized users can access documents, and that sensitive data is protected from attacks (like Cross-Site Scripting). Usability requirements focus on simplicity and consistency in the user interface, making it easy for beginners to discover editing tools and for advanced users to utilize shortcuts. Reliability is another key aspect: the system should handle unexpected disconnections gracefully and preserve user data whenever possible.

2.2 Defining the Scope: Supporting File Types & Features

One of the first decisions in designing something like Google Docs is to define its scope clearly. In other words, we need to decide what kinds of documents we will support and to what extent we will support them.

Some applications allow us to create and edit only plain text files, which is simpler but limits us to basic editing features. Others, like Google Docs, can handle a variety of file types, including .docx and .pdf, and support advanced features like images, tables, footnotes, comments, and more. When we open the scope to multiple file types, we introduce additional complexity in parsing, rendering, and editing those formats.

To keep the design manageable, we might start by focusing on core text features—like bold, italics, and headings—and gradually add advanced elements such as collaborative editing, inserting images, and track changes. The more features we include, the more we have to plan for performance optimization (especially as documents grow large) and handle specialized data structures (like complex table layouts or embedded media).

2.3 Key Features of Google Docs

The main purpose of Google Docs is to allow multiple users to work on the same document at the same time in a user-friendly interface. Some of the most prominent features include:

Real-Time Collaboration: Users see each other’s changes as they happen, along with indicators like cursors or selection highlights for each collaborator.

Rich Text Editing: This covers basic and advanced formatting options, such as changing fonts, inserting bullet lists, adding headings, or styling text with bold, italics, and underline.

Document Sharing & Permissions: Documents can be shared with individuals or groups, with varying levels of access such as view-only, comment-only, or full editing rights.

Commenting & Suggestion Mode: Users can leave inline comments, reply to them, and suggest edits that the document owner can either accept or reject.

Autosave & Version History: Changes are saved automatically, and users can revisit or restore previous versions of the document.

These features guide many of the architectural decisions we will make on the frontend, such as how to structure the user interface and how to handle real-time data updates.

2.4 Handling Real-Time Collaboration

Real-time collaboration means that if one user types or deletes something, every other user viewing the same document should see that update nearly instantly. This involves continuously sending updates from the user’s browser to a backend server and receiving updates from other collaborators.

On the frontend, we need a way to capture changes quickly—often on every keystroke—and then merge or apply them to the visible document. To do this, we typically rely on technologies like WebSockets or a similar real-time communication protocol rather than simple HTTP requests because HTTP polling can introduce noticeable delays.

When two or more people type in the same part of the document, we need a conflict resolution approach. One common approach is using algorithms like Operational Transformations (OT) or Conflict-free Replicated Data Types (CRDTs), which are designed to handle concurrent edits in ways that preserve each user’s intent.

Beyond the core text, real-time collaboration also applies to features like simultaneous commenting or dynamic updates to images and tables. The frontend must manage all this data in a way that remains consistent across all user sessions, even when the edits happen at the same moment.

2.5 Challenges in Designing a Frontend-Heavy Application

A “frontend-heavy” or “client-heavy” application places much of the processing and logic in the user’s browser rather than on a central server. This can be advantageous for responsiveness and speed, but it also brings several challenges:

Performance and Rendering: As documents get larger (hundreds of pages or thousands of elements), rendering them and handling edits in real time can become slow if we do not optimize. The frontend must manage memory usage carefully and render only what the user needs to see at any moment.

State Management Complexity: Keeping track of every user’s edits and the application’s overall state (like cursor positions, highlights, and comments) is complicated. When multiple people are editing, state changes can happen in rapid succession, so we need a robust structure (like Redux or other libraries) to avoid data conflicts and ensure the UI reflects the latest updates.

Offline Support: If a user loses their internet connection, we still want them to be able to type and store changes locally, then sync those changes when they come back online. Managing this on the client side requires additional logic to detect offline status, queue changes, and handle possible conflicts when reconnecting.

Security in the Browser: The browser is exposed to potential security threats like malicious scripts or attempts to modify local data structures. We have to ensure our code can handle untrusted inputs without compromising users’ data or system integrity.

Integration with Multiple Services: Often, Google Docs-like applications need to integrate with other services (like cloud storage, identity providers, or third-party plugins). This means the frontend must be prepared to handle different types of data, authentication flows, and user interface components in a cohesive way.

While these challenges are significant, modern frameworks, libraries, and best practices can help us tackle them effectively. By carefully planning our architecture, choosing suitable data structures, and testing our application’s performance under realistic conditions, we can build a highly interactive, robust, and user-friendly real-time editor that runs smoothly in the browser.

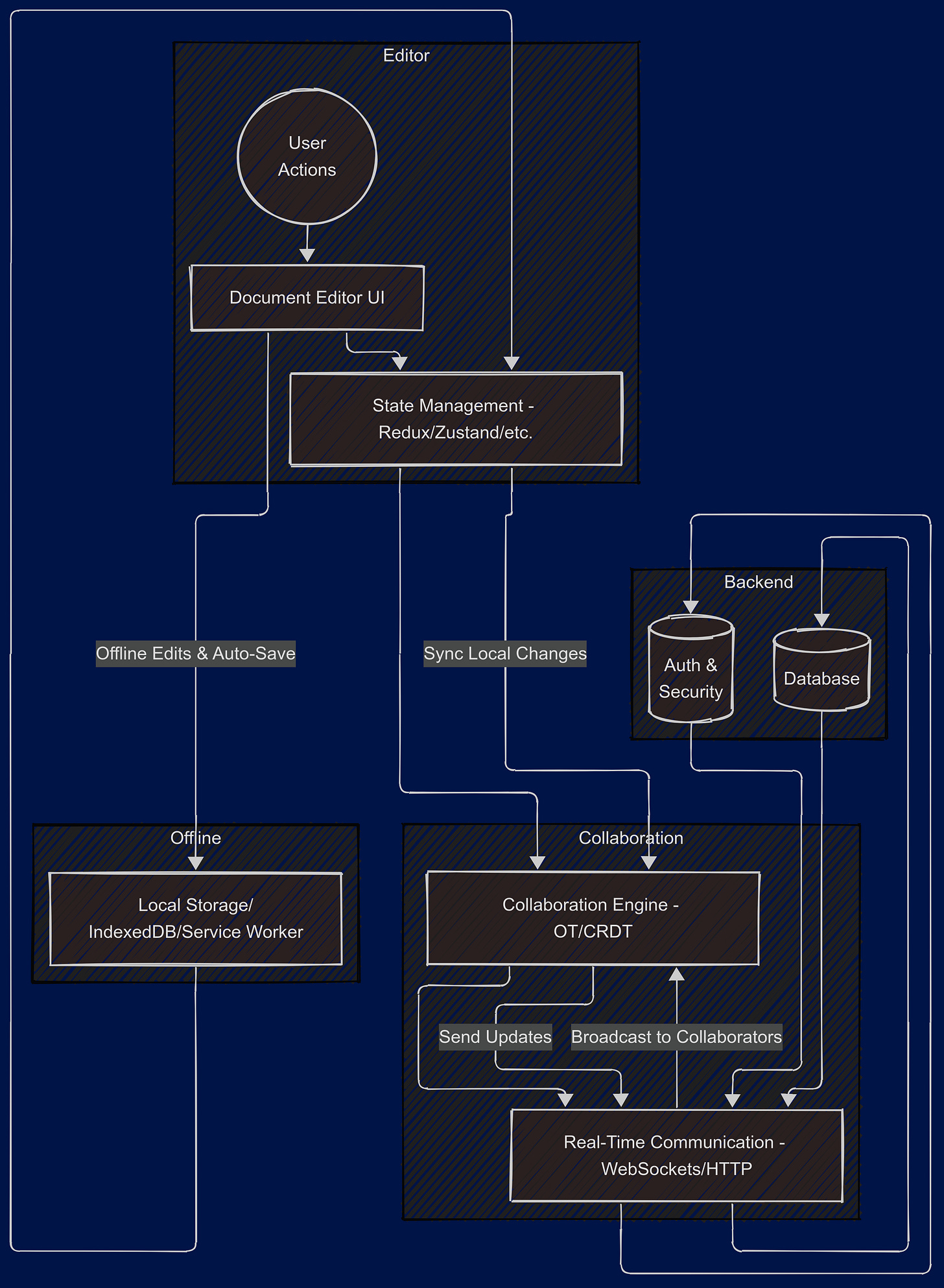

3. High-Level Architecture

3.1 Overview of the Frontend Architecture

When building a complex, real-time application like Google Docs, we need to consider how our code is structured so that it’s scalable, maintainable, and efficient. The frontend architecture typically consists of three main layers:

UI Layer (Presentation): This includes all the visual components that a user interacts with—think buttons, text fields, toolbars, and the document editor area.

State Management Layer: This manages data or “state” that different parts of the application need to access or modify, such as the current document content, user collaboration sessions, and editing settings (font sizes, color themes, etc.).

API/Network Communication Layer: This handles sending and receiving data from the server, like saving changes to the backend, loading documents, and managing collaboration events.

By separating these layers, it becomes easier to change one part without breaking another. For instance, if we decide to swap out our state management library, we don’t have to rewrite all our UI components.

3.2 Framework and Library Selection

Choosing a suitable JavaScript framework (or library) and supporting tools is crucial. Below are a few considerations:

React: A popular library for building user interfaces based on components. React’s virtual DOM and component-based architecture make it easier to break down complex UIs into smaller, reusable parts.

Vue or Angular: These are also good options, but React is often favored for large, collaborative projects due to its ecosystem of libraries and community support.

Real-Time Libraries: If we need real-time communications, we could use something like Socket.IO or integrate with any environment supporting WebSockets.

Why React?

React has a rich set of third-party libraries (for text editing, state management, etc.).

It has a strong community and many established patterns for solving common problems like collaboration and real-time updates.

React’s concept of components makes it simpler to create a modular, maintainable architecture where each piece of the UI can be worked on independently.

3.3 Component Hierarchy & UI Layer Design

A component hierarchy describes how we organize our React components into a tree-like structure. Below is an example of how we might structure a Google Docs–style editor:

App Component (Root)

Responsible for rendering the core layout, such as the navigation bar, side menu, and the main editing area.

EditorPage Component

Contains the editor itself and some additional UI elements like a toolbar (bold, italic, underline buttons, etc.).

Toolbar Component

Holds formatting buttons, font controls, and other document-related actions (saving, undo, redo).

DocumentEditor Component

The core text-editing surface. This might wrap a rich text editor library (e.g., Draft.js, Quill, ProseMirror) or a custom-built solution.

CollaborationTools Component

Manages user presence indicators, cursors, or chat if we have real-time chatting.

Key Goals When Designing the Component Hierarchy:

Reusability: Make each component do one thing well, so we can reuse it in other parts of the app (e.g., using the same Toolbar in multiple pages).

Maintainability: Keep components small and focused. A giant, monolithic component is harder to test and update.

Scalability: As the app grows, a well-organized component structure will make it easier to add new features without rewriting existing code.

3.4 State Management Strategy (Redux, Zustand, or Custom Solution)

In a real-time editing application, we have to deal with frequent state updates. For instance, user A types a word, user B types a word, user A changes formatting, and so on. Here are some common state management approaches:

Redux: A popular library that enforces a unidirectional data flow (actions → reducers → store). It’s very structured but can be verbose. Ideal for larger applications that need predictable state transitions.

Zustand: A lightweight alternative to Redux. It uses simpler concepts (like hooks) and can be easier to set up for smaller or medium-sized applications.

Context + Custom Hooks: We might rely on React Context to provide global state, combined with custom hooks for reading and updating that state. This can be sufficient for smaller-scale apps.

Other Solutions (MobX, Recoil, etc.): There are many libraries in the React ecosystem for state management. The choice often comes down to team preference and project requirements.

Why Redux or Zustand?

Redux is known for its strict structure (actions, reducers, store) and is easier to debug using tools like the Redux DevTools.

Zustand is simpler to get started with and has less boilerplate. For a big feature set like Google Docs, we might lean towards Redux because of its debugging capabilities, but Zustand is a valid choice if the team prefers a minimalistic approach.

3.5 Handling Application-Wide State for Collaboration

Real-time collaboration isn’t just about storing the document’s text—it’s also about tracking each user’s cursor position, selection range, and presence (online/offline). We may also have to manage chat messages, comments, or user permissions.

To handle this, we’ll likely have a store (e.g., Redux store) that keeps track of:

Document Content State: The actual text (and its formatting).

Collaboration State: A list of active users, their cursor positions, and any ongoing edits that haven’t been synced yet.

UI State: Things like whether the sidebar is open, or which text formatting options are selected.

This store would be updated by actions like:

USER_JOINED_DOCUMENT: A new user opens the document.

USER_MOVED_CURSOR: A user changes their cursor position.

CONTENT_UPDATED: A user types or formats text.

Every time these actions occur, they cause an update in the store, which in turn re-renders the UI with the new data.

3.6 Separation of Concerns: UI, State Management, API Communication

A clean separation of concerns helps keep the codebase understandable:

UI Components: Focus solely on displaying data and triggering events (e.g., a button that calls “boldText()”).

State Management: Receives events, updates the store, and notifies components to re-render.

API/Network Layer: Defines functions or services that communicate with the backend. For example, saveDocumentChanges(changes) or fetchDocument(docId).

This approach makes it easier to test each part independently. For instance, we can write tests for our state management logic without worrying about the UI code. We can also swap out API endpoints (for example, moving from REST to WebSockets) without rewriting our entire application.

3.7 Efficient Data Structures for Text Editing

Text editors need fast insertion, deletion, and formatting. If we use a naive data structure (like a plain string), constantly adding or removing characters in the middle could become slow. Common approaches include:

Piece Tables: Store text in “pieces” rather than one long string, making insertions and deletions more efficient.

Ropes: A binary tree structure specialized for string operations.

CRDT-based Structures (for Real-Time Collaboration): If using CRDTs, the data structure might be more complex, designed so that changes from multiple users merge automatically.

If we’re using a rich text editor library (like ProseMirror or Draft.js), these libraries often abstract away the complexity of the internal data structure. They provide an API for applying operations (insert text, remove text, change formatting) while keeping performance optimal.

3.8 Storing Document Changes Before Syncing

In a collaborative environment, we don’t always want to send every single keystroke to the server the instant it happens. Instead, it’s common to:

Collect Edits Locally: As the user types, changes accumulate in a local buffer or queue.

Batch Updates: The system sends these changes to the server either periodically (like every few seconds) or after a certain number of changes. This helps reduce network overhead.

Handle Conflict Resolution or Transformations: When multiple users are editing at the same time, we need to transform the changes so they don’t override each other. This is where techniques like Operational Transformations (OT) or CRDTs come in.

By storing changes locally before syncing, we also improve the user experience during slow network conditions. The user sees their changes instantly (because it’s all local), and if the network is temporarily unavailable, the changes can sync once the connection is restored.

4. Real-Time Collaboration & Synchronization

Real-time collaboration is the heart of an application like Google Docs. It allows multiple users to work on the same document at the same time, see each other’s changes instantly, and keep the document in a consistent state. Below, we’ll explore how to implement real-time editing, handle conflicts, ensure low-latency updates, choose the right algorithm (OT vs. CRDT), manage efficient syncing, address disconnections/offline mode, and recover unsaved changes.

4.1 Implementing Real-Time Editing

To implement real-time editing, the frontend needs a mechanism to capture user input (like keystrokes, formatting changes, or cursor movements) and then instantly share these changes with other active collaborators. This typically involves:

Listening to User Events: Whenever a user types text, deletes content, or applies a style (like bold), the editor needs to capture that event. Most modern rich-text editors (Draft.js, Quill.js, ProseMirror, etc.) provide hooks or callbacks for these actions. For instance, if a user presses the “A” key, the editor can trigger a function that records “insert character ‘A’ at position X.”

Broadcasting Changes: Once the editor captures the change, it sends this information to a shared communication layer—often a server over WebSockets. WebSockets offer a persistent connection, meaning the server and all connected clients can instantly broadcast and receive updates.

Applying Changes Locally: Each collaborator’s editor also receives these updates. When user A adds a word, user B’s editor needs to insert that word at the exact same position to maintain a consistent view. This is done by applying the incoming change to the local document model.

Visual Feedback: Changes made by other collaborators might appear with coloured highlights, distinct cursors, or name labels. This gives each user a clear view of where others are editing in real time.

Implementing real-time editing thus involves tight integration between an editor component, a state management system, and a synchronization mechanism (like WebSockets or another real-time protocol).

4.2 Conflict Resolution Strategies

Conflicts occur when two or more users try to change the same part of the document at the same time. For example, if both user A and user B insert different text at the same position, the system needs a way to reconcile these edits without losing anyone’s contribution.

Last-Write-Wins (LWW): In the simplest approach, whichever user sends their change last will overwrite the other. However, this can be frustrating for users because it might erase someone’s edits. This approach is generally not user-friendly for text editors.

Operational Transformation (OT): OT transforms incoming edits so that they apply correctly, even if the document changed between the time of the edit and the time it was received. Essentially, if two users insert text at the same position, the algorithm offsets one user’s insertion so both get included. This ensures both edits survive, preserving intent.

Conflict-Free Replicated Data Types (CRDTs): CRDTs are data structures designed to resolve conflicts automatically without a central server deciding the outcome. Each user’s changes eventually converge to the same final state across all clients. CRDTs can handle complex text operations, but might require more memory and careful implementation details.

In practice, real-time text editors most commonly use OT or CRDTs because both allow for merges that keep everyone’s changes without overwriting. We’ll discuss the choice in more detail in Section 4.4.

4.3 Ensuring Low-Latency Updates

Low latency means that when a user types a character, all other users see that character appear almost instantly. To achieve this:

Use Persistent Connections: A WebSocket connection keeps an open communication channel, so the server can push changes immediately. Traditional HTTP polling can be too slow or inefficient, introducing noticeable delays.

Minimize Data Payloads: Instead of sending the entire document whenever something changes, we only send the “delta” (the small piece of changed data, like “insert ‘A’ at position 25”). This reduces network usage and speeds up updates.

Local Optimistic Updates: Show a change in the user’s own editor right away, without waiting for a server response. The application then confirms the change once it’s processed by the server. This approach makes the user’s experience feel instantaneous, though it requires careful handling if a server rejects or modifies the change.

Efficient Rendering: On the frontend, only re-render the portion of the text that changed, rather than re-rendering the entire document. Libraries like React let’s us control which components update to ensure the UI stays responsive.

4.4 Choosing Between Operational Transformation (OT) & CRDTs

Both OT and CRDTs aim to solve the same core problem: maintaining consistency across multiple users editing the same piece of text. However, they differ in their approach:

Operational Transformation (OT):

How it Works: When a new operation arrives (such as “insert character at position X”), the system checks how many operations have been applied to the document since that edit was created. It then “transforms” the new operation so that it applies cleanly on the updated text.

Pros: Well-tested in projects like Google Docs. It’s conceptually intuitive for text editing.

Cons: Requires a central server to serialize operations or a complex peer-to-peer approach. The transformation logic can become complex for advanced features like tables or embedded elements.

Conflict-Free Replicated Data Types (CRDTs):

How it Works: Each user’s edits are stored in a data structure that can merge automatically when states conflict. Every inserted character can have a unique identifier, allowing the structure to converge on a single order.

Pros: Peer-to-peer friendly, can work offline easily, and once we understand the concept, merges can be automatic.

Cons: Might use more memory because each character can carry additional metadata. The implementation can be tricky and less intuitive at first.

Choosing one often comes down to the team’s familiarity with these approaches, the complexity of the product, and performance constraints. Google Docs historically used OT, but many modern editors are experimenting with CRDTs due to their flexibility in offline/peer-to-peer scenarios.

4.5 Efficient Change Syncing with the Backend

Even though the frontend handles much of the collaborative logic, changes still need to be persisted to a server or cloud. The key considerations are:

Batch Updates: Instead of sending every single keystroke to the server individually, we can group updates together over short intervals (e.g., every 100-200 milliseconds). This avoids flooding the server with tiny requests.

Delta-Based Syncing: Only send the “differences” rather than the whole document. This keeps network usage low.

Document Versioning: Each time the document changes, increment a version number. That way, if the server receives updates out of order, it can still apply them in the correct sequence or request a re-sync if it detects a mismatch.

Conflict Handling: The server might also apply transformations (in the case of OT) or merges (in the case of CRDTs). The server’s role is to maintain an authoritative source of truth and distribute updated states back to all clients.

4.6 Handling Disconnections and Offline Mode

Real-time apps need a solid strategy for what happens when someone goes offline or their connection drops momentarily:

Automatic Reconnect: The frontend should detect when the WebSocket connection is lost and automatically attempt to reconnect at intervals.

Local Caching of Edits: If a user is offline, the editor can store changes locally (e.g., in IndexedDB or localStorage). When the connection is restored, it sends the stored changes to the server.

UI Indication: Show the user when they’re offline or reconnecting. Possibly disable collaborative features like seeing other people’s cursors in real-time, since those updates won’t be available offline.

Merge Upon Reconnection: Once the user is back online, the system merges their offline edits with the latest document version. With OT or CRDTs, this can happen seamlessly, but it’s crucial to ensure no data is lost if there were significant changes while they were disconnected.

4.7 Recovering Unsaved Changes

Users might accidentally close their browser, lose power, or refresh the page without explicitly saving:

Periodic Autosave: The frontend can autosave every few seconds, or whenever the user stops typing for a brief moment. These autosave triggers ensure the server always has a recent state of the document.

Local Backup: Even if the connection to the server fails, keep a local backup in IndexedDB or localStorage. Upon reopening, if the system detects unsaved local changes that weren’t synced to the server, it can prompt the user to restore them.

Version History: Maintain a lightweight version history so users can roll back to a prior state if something goes wrong. This can be as simple as storing deltas or more elaborate with a dedicated versioning server.

Graceful UI Prompts: If a user tries to close the tab while unsaved changes are pending, we can show a warning message or attempt to do a quick save. Modern browsers allow this with onbeforeunload events, although some have limitations on how much we can customise these prompts.

5. Performance Optimization

Building a real-time collaborative editor like Google Docs requires careful attention to performance because the application often deals with large documents, frequent user interactions, and live updates from multiple collaborators. Below are strategies and explanations to ensure the frontend stays responsive and smooth.

5.1 Ensuring Frontend Performance for Large Documents

When users work with large documents (hundreds of pages or thousands of paragraphs), performance can degrade if the browser tries to handle everything at once. Here are some simple strategies to keep things running efficiently:

Segment the document: Instead of putting all the text in a single large container, break the document into sections (like pages or chapters). This way, the browser only processes a part of the content at a time.

Load data progressively: When a user opens a large document, consider loading only the first few sections that are immediately visible. Additional parts of the document can be fetched or rendered only when needed.

Use efficient data structures: Storing text in arrays or specialized data structures (like piece tables or rope data structures) helps ensure that insertions and deletions are handled quickly. This can prevent delays when users type or delete text.

Goal: The main goal is to avoid loading everything upfront and to minimize the workload on the browser so the editor remains fast and responsive.

5.2 Optimizing Rendering of Text Documents

Rendering is the process by which the browser takes the HTML (and associated JavaScript and CSS) and displays it on the screen. For a text-heavy application like Google Docs, rendering optimization is crucial:

Minimize DOM Nodes: Every piece of text in a document corresponds to a DOM (Document Object Model) node. If we have thousands of paragraphs each wrapped in multiple HTML tags, we could have a very large DOM tree. A large DOM slows down the browser.

Tip: Merge adjacent text nodes or reduce extra HTML tags to keep the DOM as small as possible.

Batch Updates: Instead of updating the DOM on every single keystroke, batch multiple changes together. Modern libraries like React do this batching automatically, but it’s important not to do extra renders in our own code.

Avoid Re-renders: Use libraries or built-in features (like React’s memo or PureComponent) to re-render only the pieces of the UI that have actually changed.

Goal: Ensure that when the user types or scrolls, the application updates quickly without re-rendering large portions of the screen unnecessarily.

5.3 Virtualization Strategies (e.g., react-window)

Virtualization means that instead of rendering all the content in the DOM at once, we only render what is visible plus a small buffer above and below the visible area. For example, if the user sees only 20 lines of text on the screen, we only keep 20–40 lines in the DOM, not the entire document.

react-window or react-virtualized are popular libraries that handle this automatically. They calculate which elements should be shown based on the user’s scroll position and dynamically remove off-screen elements from the DOM.

Benefits:

Reduced DOM size: The browser only deals with a small number of elements at any given time.

Faster rendering: Less content to layout and paint.

Efficient memory usage: We don’t keep thousands of paragraphs in memory if they are not currently needed.

This approach is extremely helpful if a user is working on a document with hundreds or thousands of pages because it ensures that only the visible portion is actively managed by the browser.

5.4 Efficient Undo/Redo Implementation

An editor must offer undo and redo so users can revert changes or restore them. However, storing the entire document each time can quickly blow up memory usage and slow down performance. Here’s how to handle this more efficiently:

Store Differences (Deltas): Instead of saving the entire document state, save only the differences (or “diffs”) between consecutive states. For example, if a user types the letter “A,” we store just “+A at position 100,” not the entire document text.

Use a Stack: Commonly, we keep two stacks—one for undo operations and another for redo. When the user undoes an action, we pop from the undo stack and apply it in reverse, then push it onto the redo stack.

Limit History: For performance, we might limit the history to a certain number of actions (e.g., 100). This prevents the application from storing an excessive number of changes.

This strategy ensures fast undo/redo operations without burdening the system with huge snapshots of the document at each step.

5.5 Optimizing Cursor Movement and Selection Tracking

In a live document editor, the position of the cursor and selected text must update in real-time. When multiple users are editing, each user’s cursor or selection can appear on screen. Here’s how to keep it efficient:

Use Lightweight Representations: Store a cursor position as a simple integer that marks where in the text the cursor is. For selections, store a start and end index (like [startIndex, endIndex]).

Only Update When Needed: If the cursor is stationary and the user isn’t typing, there’s no need to constantly recalculate its position. Updates only matter when the user presses keys or moves the mouse.

Batch Collaborator Updates: When receiving updates about other users’ cursors, group these updates and apply them together, instead of adjusting them one by one in rapid succession.

This approach ensures that the editor remains snappy and that the cursor and selection are accurate without draining resources.

5.6 Avoiding Unnecessary Re-Renders in React

React apps can re-render multiple times if we’re not careful. Every re-render can lead to new calculations, new layouts, and potentially slow down the app. Some tips to avoid over-rendering:

Use Memoization: React’s memo (for function components) or PureComponent (for class components) helps skip re-renders when props haven’t changed.

Optimize Redux or Other State Libraries: If we use something like Redux or Zustand, ensure that state updates are as minimal as possible. For instance, store only necessary slices of data at each component level.

Split Components: Break down large components into smaller ones. The smaller component only re-renders when its local props or state change.

Avoid Inline Functions or Objects: In React, inline objects and functions can cause frequent re-renders because references change on every render. Instead, define functions outside the render method or use useCallback.

By carefully managing how components receive and use data, we can significantly cut down on wasted re-renders.

5.7 Lazy Loading for Large Documents

Lazy loading means loading parts of our application (or data) only when needed. For a document editor:

Chunk the Document: Perhaps load the first few pages of a document right away, and then fetch more pages only when the user scrolls to the bottom of the visible content.

Deferred Rendering: We can also defer non-critical UI elements (like user avatars or certain toolbar features) until after the main editor is ready. This technique ensures the core editing experience loads faster.

Lazy loading is similar to virtualization in concept but focuses more on when data is fetched rather than how it’s displayed. Together, they help ensure the user never has to wait long to start editing or viewing the document.

5.8 Debouncing and Throttling Updates

Debouncing and throttling are techniques to control how frequently certain events trigger code execution. In a text editor, users might type very quickly, generating many events in a short time. Sending every keystroke to the server could cause network overload and slow down the client.

Debouncing: Wait until the user stops typing for a short interval (e.g., 300ms) before sending updates or doing heavy computations. This way, if the user types quickly, the function only runs once after they pause.

Throttling: Execute the update function at a set maximum rate. For instance, we might only allow one server update per second, even if the user types 10 characters in that second.

By debouncing or throttling updates, we reduce unnecessary operations and keep the editor feeling responsive. It also prevents flooding the server with too many requests at once.

6. Caching & Data Persistence

Caching and data persistence are crucial for a web-based editor like Google Docs. They help ensure that users don't lose their work, even if their internet connection fails, and they also make the app faster by avoiding unnecessary network calls. Below, we’ll discuss various strategies and tools to achieve efficient caching and data persistence in a user-friendly document editor.

6.1 Using LocalStorage, IndexedDB, or Service Workers

LocalStorage

What It Is: LocalStorage is a simple key-value storage mechanism provided by the browser. Each value is stored as a string.

When to Use: It’s best for small amounts of data, like user preferences or simple flags (e.g., “night mode” on/off).

Pros: Very easy to use, synchronous access, widely supported in modern browsers.

Cons: Limited storage space (usually around 5–10 MB), and because it’s synchronous, it can block the main thread if we store too much data.

IndexedDB

What It Is: IndexedDB is a more robust database system in the browser. It allows us to store large amounts of structured data.

When to Use: It’s perfect for storing offline data for documents, like partial or full versions of a file, because we can store complex objects and larger amounts of data than LocalStorage can handle.

Pros: Asynchronous, can handle more data, supports indexing for faster queries.

Cons: Slightly more complex API compared to LocalStorage.

Service Workers

What They Are: Service Workers run in the background, independent of our web page. They can intercept network requests and manage a cache of responses (using the Cache API).

When to Use: Ideal for offline capabilities. When the user goes offline, Service Workers can serve cached files or data so the app still works to some extent.

Pros: Excellent for building Progressive Web Apps (PWAs). Can handle offline requests and push notifications.

Cons: More complex to set up and maintain, requires a careful design to handle multiple caches and updates.

Overall, choosing the right storage mechanism often involves using a combination of these tools. For example, we might cache static assets (like images or JavaScript bundles) using Service Workers, keep important user data (like partial document edits) in IndexedDB for offline access, and store quick flags or states (like user settings) in LocalStorage.

6.2 Implementing Efficient Autosave Functionality

Autosave is the process where changes in a document are saved automatically without requiring the user to press a “Save” button. This feature is critical for avoiding data loss and improving user experience.

Capturing Changes

In a document editor, every keypress or formatting change can generate an “edit event.” Instead of sending every single event to the server in real-time, we can accumulate these edits in a small buffer in the frontend.

This buffer will temporarily store changes (e.g., typed characters, deletions, formatting commands, etc.).

Saving at Intervals or Triggers

Timed Interval: We can save changes every few seconds (e.g., every 5 seconds). If the user is actively typing, the system automatically collects edits and commits them to the backend after this interval.

Idle Detection: Another approach is to save when the user stops typing or becomes idle. This can reduce the load on the server because we’re not sending updates while the user is actively typing.

Combination: Most modern solutions use both a short timed interval and an idle check for optimal performance and reliability.

Local Caching Before Server Sync

To handle sudden disconnections, we can store the latest edits in IndexedDB or LocalStorage (if small) every time we perform an autosave action.

Once the connection is re-established, these cached edits are synced with the backend, ensuring minimal data loss.

By blending interval-based saving with local caching, we can create a robust autosave mechanism that protects users from accidentally losing their work.

6.3 Caching Strategy for Reducing API Calls

For a tool like Google Docs, the user might open and edit multiple documents frequently. Repeatedly fetching the same data from the server can increase loading times and waste bandwidth.

Document Metadata Caching

Instead of fetching a complete list of documents every time, we can store document metadata (like titles, last-modified times, owner info) in a local cache (IndexedDB or LocalStorage).

When the user opens the app, we check our local cache first. If the cached data is recent, we avoid extra server calls.

Partial Document Caching

For large documents, we might cache the most recently accessed portions, such as the first few pages or the most recent edits, in local storage.

This way, the editor can load instantly while additional data is fetched in the background.

Intelligent Invalidation

A good caching strategy includes a way to invalidate or update cache entries when they become stale.

For instance, if a document was edited on another device, we should detect that (e.g., through a version identifier) and refresh the local cache to stay consistent.

By carefully deciding what data to cache and when to invalidate it, we can significantly reduce the number of redundant API calls and make the application more responsive.

6.4 Document Preloading for Faster Access

Document preloading is a technique where the application anticipates the user’s next actions and starts fetching data proactively.

Predictive Loading

If a user is browsing a list of documents, the system might pre-fetch the first page or summary of the next likely document they will open (based on recent activity or user behaviour).

For instance, if the user is currently in a folder with several documents, we might preload metadata or partial data for those documents in the background.

Immediate Availability

By the time the user clicks on a document, most (or all) of its data is already in local storage or the cache, allowing the editor to open it almost instantly.

Balancing Bandwidth and Performance

Preloading should be done carefully to avoid using too much bandwidth, especially on mobile networks.

A common approach is to preload only small chunks of data (metadata, partial contents) and load the rest only if the user actually opens the document.

Preloading is a balancing act: it can dramatically speed up the user experience but must be controlled to prevent unnecessary data usage.

6.5 Handling Document Versioning

Versioning ensures that users can revert to previous states of a document and that multiple versions (or forks) of a document can be maintained without confusion.

Why Versioning Matters

It allows users to see the document’s history (revision history).

In real-time collaboration, multiple users may be making changes simultaneously. Versioning provides a systematic way to track these changes.

Version Identification

Each significant state of the document can be assigned a version identifier (e.g., a timestamp or a unique version number).

When new edits come in, the system creates a new version while still retaining the old one.

Delta Storage vs. Full Snapshots

Full Snapshots: Store the entire document as a separate copy for each version. This is simpler but consumes more space.

Delta Storage: Store only the differences (edits) between versions. This saves space but is more complex to implement.

Many modern solutions use a hybrid approach where we occasionally store a full snapshot (e.g., once an hour or once a day) and store deltas in between. This prevents needing to recalculate too many deltas if we want to revert to an older version.

Frontend and Backend Collaboration

While the backend is primarily responsible for maintaining official versions, the frontend should be aware of the current version (or revision) it’s editing.

When the user saves or autosaves, the frontend sends its version identifier along with the changes. This helps the backend detect if the user is editing an outdated version and merge changes appropriately.

By designing a clear versioning system, we give users the freedom to explore different revisions of a document safely and also provide an essential foundation for real-time collaboration and conflict resolution.

7. Security & Access Control

Building a secure environment in a real-time collaborative system like Google Docs is crucial to protect users’ data and maintain trust. In this section, we will explore how to secure user data, prevent common vulnerabilities, manage permissions, and maintain robust authentication.

7.1 Securing User Data in the Frontend

Securing user data on the frontend involves ensuring that sensitive information is never exposed to unauthorized parties. While most security measures happen on the backend (e.g., storing data in secure databases), the frontend still has vital responsibilities:

Using HTTPS (TLS/SSL):

Always communicate over a secure HTTPS connection. This encrypts the data traveling between the user’s browser and the server, preventing eavesdropping or data tampering.Avoid Storing Sensitive Data in Plain Text:

If we need to store temporary user information (like tokens) in the browser, consider secure storage mechanisms:HTTP-Only Cookies: Tokens stored in HTTP-only cookies cannot be accessed by JavaScript, reducing the risk of certain attacks.

Local Storage or Session Storage: If we must use local or session storage, ensure we don’t store highly sensitive data for long. We should also protect against Cross-Site Scripting (XSS) that might try to steal this data.

Protecting Against Content Leaks:

Make sure our application doesn’t inadvertently leak data in places like:URL Query Parameters

Browser console logs

Error messages or stack traces

By keeping data encrypted in transit, avoiding plain-text storage, and minimizing leaks, we significantly reduce the risk of compromised user data on the frontend.

7.2 Preventing XSS and CSRF Attacks in a Real-Time Editor

Cross-Site Scripting (XSS) and Cross-Site Request Forgery (CSRF) are common security threats for web applications, especially those that handle user-generated content in real time.

Cross-Site Scripting (XSS)

What is XSS?

XSS occurs when an attacker injects malicious scripts (JavaScript) into a web page that other users view. If our editor doesn’t sanitize or validate user input, an attacker could insert harmful scripts into the document.How to Prevent It

Input Sanitization: Whenever a user enters text (or any data), make sure we remove or encode any suspicious code. Many rich text editors (like ProseMirror, Draft.js, or Quill) have some built-in sanitization, but we should still validate all content before it’s rendered.

Escape User-Generated Content: When displaying content in the browser, escape HTML tags so that they render as text rather than executable code.

Content Security Policy (CSP): Configure a strict CSP in our application settings. This can prevent inline scripts from running and restrict the sources from which scripts can load.

Cross-Site Request Forgery (CSRF)

What is CSRF?

CSRF occurs when a malicious website tricks a user into performing actions on another site where they are already authenticated. For example, if we’re logged into Google Docs, and we visit a harmful website, that website might try to make requests on our behalf to Google Docs.How to Prevent It

CSRF Tokens: The server generates a random token and includes it in each HTML form or request. The application verifies the token on every write/update request. If the token is missing or invalid, the request is rejected.

SameSite Cookies: Setting cookies as SameSite=strict or SameSite=lax helps prevent them from being sent with cross-site requests, reducing CSRF risk.

By combining robust input validation, output escaping, token-based verification, and a solid Content Security Policy, we can greatly reduce the risk of XSS and CSRF in our real-time editor.

7.3 Role-Based Access Control (RBAC) for Editing, Commenting, and Viewing

Role-Based Access Control ensures different users only have the permissions that match their roles. For a document editor like Google Docs, common roles might include:

Owner: Full control over the document, including setting permissions for others.

Editor: Can modify the document content.

Commenter (or Reviewer): Can view and add comments but cannot edit.

Viewer (Read-Only): Can only read the document.

Implementation Approach:

User and Permission Mapping:

Each user is mapped to a role for a specific document. For instance, a user’s role might be “Editor” for Document A but only a “Viewer” for Document B.Frontend Enforcement:

The application checks the user’s role and shows/hides UI elements based on their permissions (e.g., show the “Edit” button only to Editors or Owners).API-Level Enforcement:

Even if the user manipulates the UI in the browser, requests to edit or delete content should be verified on the server to ensure the user truly has the right role. This prevents malicious attempts to bypass the frontend.

RBAC helps maintain an organized permission structure so that only authorized individuals can modify or view certain data.

7.4 Ensuring End-to-End Encryption for Documents

End-to-end encryption (E2EE) means the document is encrypted on the user’s device and remains encrypted in transit and at rest, so not even the server can read it unless specifically allowed.

How It Works:

Key Generation: Each document could have its own encryption key. When a user creates a document, the system generates a unique key locally in the browser.

Encryption Before Upload: Before any content is sent to the server, the editor uses this key to encrypt the text. The server only stores or transmits encrypted data.

Decryption on Download: When a collaborator opens the document, the key must be securely shared (often through separate channels or using the server with additional security) so that the content can be decrypted in their browser.

Challenges with E2EE:

Key Management: Ensuring only authorized users get access to the decryption key can be complex.

Search and Indexing: If the content is fully encrypted, the server can’t index it or perform full-text searches easily unless additional mechanisms are used.

Despite the complexity, E2EE is a strong measure for protecting sensitive documents against unauthorized access—even if the server is compromised.

7.5 Authentication and Authorization (OAuth, JWT)

To control who can access a document (and what actions they can perform), we need robust authentication and authorization.

Authentication: Confirming the user’s identity.

Authorization: Verifying that the user has permission to perform an action.

Common Methods:

OAuth 2.0:

Typically used when we’re integrating with external identity providers such as Google, Facebook, or Microsoft.

The user logs in using the external provider, and our app receives an access token that proves the user’s identity.

JSON Web Tokens (JWT):

A self-contained token that includes user information, expiration time, and signature.

The token can be stored in a secure HTTP-only cookie or local storage.

The server verifies the signature on each request to ensure the token is valid.

Implementation Flow:

The user logs in through OAuth or our custom login form.

The server issues a JWT or session ID.

The frontend stores this token safely (ideally in an HTTP-only cookie).

For each subsequent request (e.g., saving a document), the token is sent to prove the user’s identity.

The server checks the token, determines the user’s roles/permissions, and either grants or denies access.

By combining proper authentication with RBAC, we ensure that only legitimate, correctly permissioned users can access or modify documents.

7.6 Preventing Malicious Script Injections in a Rich Text Editor

Real-time document editors often allow users to embed rich text, images, and potentially other media. This freedom can be dangerous if not handled carefully, because malicious users can try to inject harmful scripts or iframes.

Sanitizing User Input:

Use libraries that sanitize and clean up HTML content before it is rendered. For example, any tags like <script> should be removed, and attributes like onerror or onload in images should be stripped.Limiting Allowed HTML Elements:

We can maintain a whitelist of safe HTML tags (<b>, <i>, <u>, <p>, etc.) and disallow everything else. Similarly, we can restrict certain attributes (like style or href) to ensure no hidden scripts are embedded.Using Iframes Safely:

If we allow iframes, make sure they are sandboxed (using the sandbox attribute) so they can’t execute arbitrary JavaScript or interact with the parent page.Real-Time Checks:

In a live collaboration scenario, each user’s changes should be validated or sanitized on the client before sending them out to other collaborators. The server can then double-check this sanitization as well.

8. Collaboration Features

Collaboration features are what make Google Docs more than just a text editor. These features enable multiple people to communicate, share ideas, suggest changes, and track progress—all in real-time. Below, we’ll dive into each major collaborative feature in detail.

8.1 Implementing Commenting and Annotation

What is it?

Commenting and annotation let users highlight parts of the document and leave notes or feedback for others. For instance, we might select a sentence and comment, “Can we simplify this phrase?” or “Need a source here.” Annotations often appear as highlights in the text, with a small panel or popup displaying the feedback.

Key Considerations:

Selecting Text Ranges:

When a user highlights text and clicks “Add Comment,” the system needs to record the exact start and end points of that highlight in the document. This is often done by tracking the character indices or storing a unique identifier for each segment of text.

Frontend Data Structure:

We might store comments in a dedicated data structure that associates a text range with the comment content. For example:

{

"commentId": "unique-id",

"range": { "start": 250, "end": 272 },

"text": "This is the highlighted part",

"author": "User123",

"message": "Need to clarify the meaning",

"timestamp": 1678892200

}These objects can be kept in the application state (e.g., in Redux or a local state management library).

Displaying Comments:

The editor UI can visually mark the highlighted section. When the user hovers or clicks on that highlight, a small popup or side panel can appear showing the comment details.

A separate side panel can list all comments in chronological order or grouped by paragraph.

Real-Time Updates and Resolving Comments:

As soon as a comment is created or updated, those changes should be sent to other users in real-time so they can see the feedback immediately.

If a comment is resolved (marked as complete), it should be hidden or shown as “resolved” for everyone else.

How it Works in Practice:

User A highlights text in the document and clicks “Add Comment.”

A small form appears to type in feedback.

Upon saving, the highlighted text changes color, and the new comment is stored in a shared state that’s synced with the server (e.g., via WebSockets).

User B sees the comment appear in near real-time. They can reply or resolve it.

Any resolution or reply is also synced so that User A sees it immediately.

8.2 Designing an In-Document Chat System

What is it?

An in-document chat is a live chat feature embedded in the editor interface. It allows collaborators to discuss ideas or issues without leaving the document or using a separate tool.

Key Considerations:

Chat UI Placement:

Often, the chat appears in a collapsible sidebar or a small popup window so it does not obstruct the document. The placement should be intuitive (e.g., a “Chat” icon in the top-right corner).

Real-Time Messaging:

Messages should appear instantly (or as close to instantly as possible) for all participants. This is typically done using a real-time protocol like WebSockets.

Each message can contain sender info (avatar, name), timestamp, and the message text.

Backend and Storage:

Messages could be temporarily stored in the frontend state. At the same time, they are sent to the server and broadcasted to other users’ clients.

A simple data structure might look like:

{

"messageId": "unique-id",

"author": "User123",

"content": "Hello everyone!",

"timestamp": 1678892400

}The server can store the chat history so that new users joining the document can see previous messages.

Notifications and Presence:

If a new message arrives while someone is not looking at the chat, a small notification can appear.

“Presence indicators” can show which collaborators are online and viewing the document.

How it Works in Practice:

User A opens the chat panel and types a message: “Let’s move section 2 to the top.”

The frontend sends this message via WebSockets to the server.

The server immediately relays the message to all connected clients.

User B sees the message appear in the chat panel. They can respond without leaving the document.

8.3 Displaying Cursors and Selection Highlights for Multiple Users

What is it?

When multiple users work on the same document, it’s helpful to see exactly where each person’s cursor is, as well as any text they have highlighted. This feature makes collaboration more transparent and reduces confusion about who is editing what.

Key Considerations:

Tracking Cursor Position:

Each user’s cursor can be identified by a unique color or name tag.

The application needs to communicate cursor position changes to the server (e.g., every time the user moves their cursor with arrow keys or a mouse click).

Updating Other Clients:

When the server receives a cursor update, it broadcasts the new position to other connected clients.

Those clients then render a small cursor icon (often with the user’s name or avatar).

Highlighting Selections:

If a user selects a range of text (e.g., from character 100 to 120), that range can be highlighted in a semi-transparent color unique to the user.

Similar to cursor position, these selections need to be shared in real-time.

Performance Considerations:

Cursor movements can change many times per second. To avoid overloading the network, we might throttle or debounce updates so they only happen when necessary (e.g., after a slight pause).

How it Works in Practice:

User A clicks on line 10, moving their cursor to that position.

The editor captures the new cursor position and sends it to the server.

The server relays the position to other users.

User B’s browser receives this update and draws a small cursor icon at the specified location, labeled “User A.”

If User A selects text, the highlight range is communicated in a similar manner.

8.4 Implementing Track Changes (Suggestion Mode)

What is it?

Track Changes, often called “Suggestion Mode,” is a feature that logs every edit (insert, delete, format change) as a suggestion, rather than committing it immediately. Collaborators can then review each change, accept or reject it, and leave comments on individual suggestions.

Key Considerations:

Capturing Edits:

Each keystroke or formatting change can be recorded along with metadata, like which user made the change, when it was made, and the original vs. updated text.

The system might need to store these edits as separate “diff” objects, such as:

{

"changeId": "unique-id",

"author": "User123",

"timestamp": 1678892600,

"oldText": "Hello",

"newText": "Hi",

"range": { "start": 50, "end": 55 }

}Visual Representation:

Inserted text might appear in a different color or underlined to show it’s a suggestion.

Deleted text might appear with strikethrough, often in red, to indicate it’s proposed for removal.

Accepting or Rejecting Changes:

Users can hover over a suggested change to see who proposed it and click “Accept” or “Reject.”

When accepted, the suggestion becomes part of the final text. When rejected, the text reverts to the original.

Synchronization:

As soon as a change is made, it should appear in real-time for other collaborators.

Accept/Reject actions should also sync so that everyone sees the updated document.

How it Works in Practice:

User A toggles “Suggestion Mode” on and types “Hello” into the document.

The system records an “insert” suggestion from User A. The new text appears highlighted or underlined.

User B sees the suggestion appear in their view. They can click on it, see it’s from User A, and choose to accept or reject.

If User B clicks “Accept,” the text becomes regular (non-highlighted), and the old version disappears.

8.5 Implementing @Mentions and Notifications

What is it?

@Mentions allow us to tag a specific person in a comment or in the document text, which usually triggers a notification to that person. This makes it easy to get someone’s attention or ask for input.

Key Considerations:

Detecting the “@” Character:

While typing a comment or in a chat box, when a user types “@,” the system can open a dropdown menu of possible people to mention.

This requires fetching a list of collaborators or user contacts, often via an API.

Linking to a User Profile:

Once a mention is selected (e.g., “@User B”), the system stores metadata linking that mention to a user ID.

The mention text might show up as clickable to reveal the user’s info or send them a direct notification.

Notifications:

Typically, a notification system will either email the tagged user, send them a push notification, or highlight the mention in the UI.

On the frontend, we might maintain a small notification center or badge indicating unread mentions.

Real-Time Updates:

If user B is online at the same time and is tagged in a comment, they can see it instantly pop up, possibly with a beep or visual alert.

How it Works in Practice:

User A types a comment: “@User B, can we clarify this section?”

The system recognises the “@” symbol, shows a dropdown, and User A picks User B’s name.

The mention data is saved in the comment object, and a real-time message is sent to User B’s client.

User B sees a notification appear in the document or receives an email/push notification, depending on their preference.

8.6 Efficient Edit History & Revision Tracking

What is it?

Edit history (or revision history) allows users to view past versions of the document. They can see who made changes, when those changes were made, and what was changed. Some systems allow restoring the document to an earlier version.

Key Considerations:

Versioning Strategy:

A common method is to store “snapshots” of the document at significant points (like every few minutes or when the user manually requests).

Alternatively, we can store every change (or “delta”) so we can reconstruct any version by applying these deltas in sequence.

Retrieving Past Versions:

When a user requests a previous version, the system either:

Loads a past snapshot directly, or

Replays the changes from the original version up to that point in time.

For large documents, storing every single version as a full snapshot can be expensive. Hence, a delta-based approach is often more space-efficient.

UI for Revision History:

Typically, a “History” panel can show a timeline of edits. Clicking a specific timestamp or version ID reveals how the document looked at that moment.

Users can compare two different versions or restore an older version entirely.

Real-Time Collaboration Impact:

Because multiple people can edit at once, the revision history has to capture merges of all those edits correctly. This is why robust real-time synchronisation methods (e.g., OT or CRDT) are crucial; they keep a consistent record of all changes.

How it Works in Practice:

User A and User B edit the document simultaneously, generating a sequence of changes or version checkpoints.

The system stores these changes on the server with timestamps and user IDs.

When User A opens the revision history view, they see a chronological list of changes.

Clicking on a past revision loads that version’s content into a read-only mode, allowing User A to decide whether to revert or simply inspect it.

9. Offline Mode & Synchronization

9.1 Designing an Offline-First Editing Experience

An offline-first approach means we assume our users might go offline at any time, and we design the system to handle this gracefully. In practical terms:

Local Storage of Document Data

When a user opens a document, the editor immediately caches the document data on their device. A common choice for storing this data is IndexedDB in the browser. IndexedDB is preferred over localStorage for large text data because it can handle bigger chunks of data more efficiently.

This offline storage contains not just the initial document content but also any in-progress edits or changes the user makes.

Immediate Document Interaction

Once the editor has the document data locally, the user should be able to edit without waiting for a round-trip to the server. This makes the user experience fast and fluid, even if the network is slow or temporarily unavailable.

UI Feedback for Connectivity

The editor can display a simple status indicator—for example, a small icon or label that says “Online” or “Offline.” When the internet connection is lost, it can switch to “Offline” mode, reassuring the user that changes are still being saved locally and will be synced later.

Service Workers (Optional but Helpful)

Service Workers allow us to intercept network requests and serve cached assets (HTML, CSS, JS) or even data from IndexedDB. This ensures the editor loads instantly even when the user is offline.

They also enable background syncing once the connection is restored, meaning we don’t have to rely solely on the active browser tab to push changes.

9.2 Syncing Offline Changes with the Server

Once the user regains connectivity, the application must synchronize all offline edits with the server. The primary challenge is to merge changes made offline with any changes that might have occurred on the server in the meantime.

Local Queue of Changes

Each time the user makes an edit while offline, this edit is recorded in a local queue stored in IndexedDB or a similar offline storage mechanism.

Instead of storing the entire document repeatedly, it’s more efficient to store small patches or diffs that represent what changed in the document.

Check for Connectivity

When the browser detects that the internet is back (for example, through an event listener on window.ononline or by periodically pinging the server), the application attempts to send these queued changes to the server.

If the server responds that it’s receiving changes successfully, the local queue can start clearing out the edits that have been acknowledged by the server.

Pull Latest Server State

While offline, someone else might have edited the same document. So, after pushing our local changes, we also pull the latest version from the server.

This is where conflict resolution enters the picture. The server might say, “Hey, I’ve got a different version of the document than we do.” The application then merges those changes with the local version.

Visibility to the User

The editor can briefly show a “Syncing…” message or animation, so the user knows the system is connecting and sending updates.

Once syncing is complete, the status can switch to “All changes saved.”

9.3 Handling Conflicts Between Offline and Online Changes

Conflicts occur when multiple users edit the same portion of a document at the same time, or when we come back online and discover that the server has a different state than our local copy. Handling conflicts in a user-friendly way is crucial.

Operational Transformations (OT) or CRDTs

One way to handle conflicts is through Operational Transformation (OT), a technique where each edit is represented as an operation (e.g., “insert character X at position Y”). OT servers keep track of operations from each user, transform them so they remain compatible with other simultaneous edits, and produce a final consistent state.

Another option is Conflict-free Replicated Data Types (CRDTs), which can merge changes from multiple concurrent editors automatically without requiring a central server to transform operations.

User Notification vs. Automatic Merge

Some conflicts can be safely merged automatically (like two different users inserting text at different places in the document).

If two users edit exactly the same text, the system might have to show a merge conflict resolution UI. In practice, advanced editors (like Google Docs) rarely show a direct conflict interface; instead, they handle it in real time with more sophisticated algorithms.

Versioning and Revisions

It’s often helpful to maintain a history of versions. If the merge doesn’t turn out the way a user expects, they can revert to a previous state. The presence of revision history makes conflicts less scary because we can always undo or roll back undesired changes.

9.4 Delta-Based Synchronization Mechanism

A delta-based synchronization mechanism is crucial for both performance and reliability. Instead of sending the entire document on every change, deltas (or patches) contain only the minimal changes that occurred since the last known state.

What is a Delta?

A delta is a compact representation of changes, such as “User inserted the word ‘hello’ at index 50” or “User removed 5 characters at index 30.”

These deltas can be small JSON objects or specially formatted messages. The idea is to send as little data as possible over the network.

How Deltas Work in Real-Time

In real-time editing, every keystroke (or batched collection of keystrokes) generates a delta. For instance, typing “Hi!” could generate multiple deltas—one for H, one for i, one for !—but we can batch them into a single delta if we want fewer network calls.

When the server receives a delta, it applies it to the current document version on the server. Simultaneously, other connected clients receive the delta and apply it to their local copies.

Offline Scenario with Deltas

While offline, our deltas are stored locally in a queue. When we come back online, these deltas are sent to the server in sequence.

The server merges them with any deltas that arrived in the meantime from other users, ensuring a consistent final document.

Benefits of Delta-Based Approach

Efficiency: Sending just the changes is significantly lighter than sending the entire document. This makes real-time updates faster and reduces bandwidth usage.

Conflict Handling: Using OT or CRDT algorithms on top of deltas is more straightforward than trying to reconcile entire document states repeatedly.

Scalability: Delta-based synchronization scales better because the server deals with relatively small messages instead of massive text payloads.

10. Scalability & Multi-Tenancy

10.1 Scaling to Support Millions of Concurrent Users

Scaling our frontend system to handle millions of users at the same time involves multiple strategies that ensure both reliability and speed. At its core, we want to make sure that no single server or data center becomes a bottleneck for users trying to open and edit documents.

Distributed Infrastructure:

One way to handle high traffic is to distribute our servers and data storage across multiple regions. For instance, we can place servers in different geographical areas, such as North America, Europe, and Asia. This allows users to connect to the server closest to them, reducing latency (the delay before data starts to move).Content Delivery Networks (CDNs):

A CDN is like a network of proxy servers spread out around the world. When a user opens our Google Docs frontend, all the static files (HTML, CSS, JavaScript) can be delivered from a location near them. This decreases load time since data travels a shorter distance.Load Balancing:

Load balancers act like traffic cops, directing user requests to the least busy server. This ensures that no single server is overwhelmed by user requests while others sit idle. When we have load balancers in place, we can easily add more servers during peak times (horizontal scaling).Efficient Frontend Caching:

Using browser caches and service workers, we can temporarily store certain assets or even partial data on a user’s device. This strategy reduces the number of requests made to the server, which is crucial during high-traffic situations.Optimizing Code and Minimizing Payloads: